The Stanford Center for Research on Foundation Models (CRFM) has assessed how well AI foundation model providers, such as OpenAI and Google, currently align with the draft requirements of the European Union’s (EU) proposed Artificial Intelligence Act. The Act, though not yet in force, is seen as the world’s first comprehensive regulation designed to govern AI. It carries significant influence because of the EU’s large population and because it could set global precedents for AI governance.

The CRFM researchers found that most AI foundation model providers do not currently meet the Act’s draft requirements. Providers disclose too little about the data, compute, and deployment of their models, and about the key characteristics of the models themselves. Specifically, many do not comply with requirements to describe their use of copyrighted training data, the hardware used and emissions produced in training, and how they evaluate and test their models.

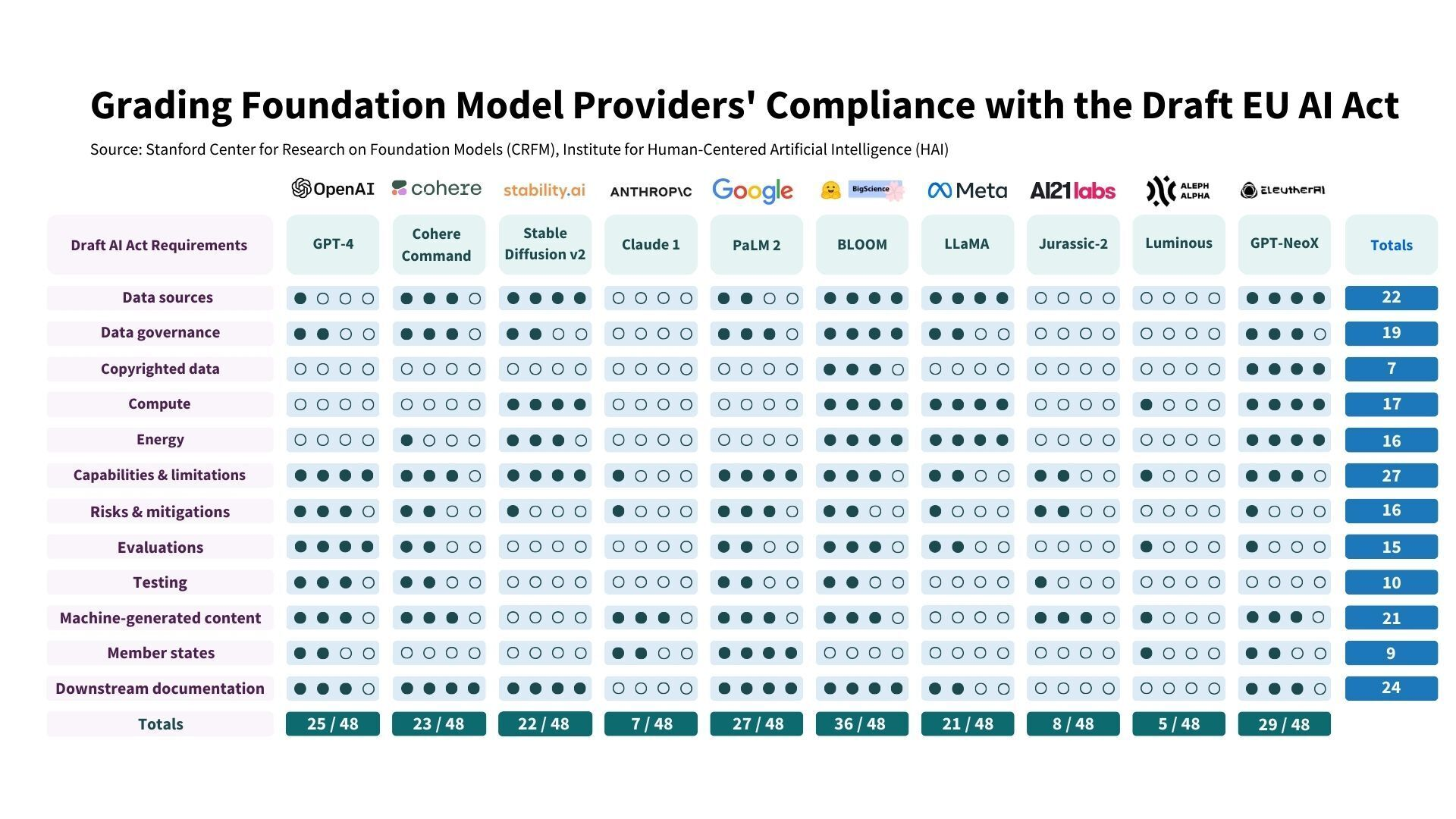

The researchers extracted 22 requirements from the Act that are directed at foundation model providers. Of these, 12 were selected because they could be meaningfully evaluated using publicly available information. The 12 requirements fall into four categories: data resources, compute resources, the model itself, and deployment practices. A 5-point rubric was designed for each requirement, and the compliance of 10 foundation model providers was assessed against it. Compliance varied widely across providers, with some scoring less than 25% and only one provider scoring at least 75%.
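
To make the scoring arithmetic concrete, here is a minimal Python sketch of how per-requirement rubric scores could be turned into an overall percentage. It assumes the 5-point rubric runs from 0 to 4 for each requirement, so 12 requirements give a maximum of 48 points; the summary above does not state the scale, so treat that as an assumption. The function names and the example scores are hypothetical and are not taken from the study.

```python
from typing import List

NUM_REQUIREMENTS = 12   # requirements evaluated in the study
MAX_POINTS = 4          # assumption: 5-point rubric scored 0..4


def compliance_percent(scores: List[int]) -> float:
    """Return the overall score as a percentage of the maximum possible."""
    if len(scores) != NUM_REQUIREMENTS:
        raise ValueError(f"expected {NUM_REQUIREMENTS} scores, got {len(scores)}")
    for s in scores:
        if not 0 <= s <= MAX_POINTS:
            raise ValueError(f"score {s} is outside 0..{MAX_POINTS}")
    return 100.0 * sum(scores) / (NUM_REQUIREMENTS * MAX_POINTS)


def band(percent: float) -> str:
    """Place a percentage into the bands mentioned in the article."""
    if percent < 25:
        return "below 25%"
    if percent >= 75:
        return "at least 75%"
    return "between 25% and 75%"


if __name__ == "__main__":
    # Hypothetical provider: illustrative scores only, not real results.
    example_scores = [3, 2, 0, 1, 4, 2, 3, 1, 0, 2, 3, 1]
    pct = compliance_percent(example_scores)
    print(f"{pct:.1f}% ({band(pct)})")
```

Under this assumed scale, a provider would need at least 36 of 48 points to reach the 75% mark, and fewer than 12 points would put it below 25%.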

The study identified four areas where many organizations score poorly: copyrighted data, compute/energy use, risk mitigation, and evaluation/testing. These are persistent challenges that the AI industry needs to address. The researchers emphasized that transparency should be prioritized in order to hold foundation model providers accountable, and that enforcement of the AI Act would bring significant changes.

Source and more information: Stanford Center for Research on Foundation Models